Devlog #5 - Training My Drone: Battery Awareness

After completing the initial version of the drone agent and successfully training it to collect a target, I wanted to push the simulation further. The goal this time was to increase complexity and make it more realistic.

What's New?

Battery Mechanic

One of the major additions in this phase is the battery system. The drone now has a limited amount of energy that drains with every action it takes. This introduces a new challenge: the agent must not only seek out the target, but also manage its energy efficiently to avoid running out of battery mid-task.

Here's how it works:

- The drone starts with a full battery.

- Every action taken (step, rotation) consumes a small amount of energy.

- If the battery reaches zero, the episode ends, and the drone gets a negative reward.

- A charging station is placed in the environment, and colliding with it allows the drone to recharge and continue its task.
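To make these rules concrete, here is a rough, engine-independent sketch in Python. It is not the Unity C# shown in the screenshots; the class name `Battery`, the capacity, the per-action cost and the "recharge to full" behaviour are all assumptions chosen for illustration.

```python
class Battery:
    """Hypothetical battery model; names and numbers are illustrative only."""

    def __init__(self, capacity: float = 100.0, cost_per_action: float = 0.5):
        self.capacity = capacity                # assumed full-charge value
        self.cost_per_action = cost_per_action  # assumed drain per step or rotation
        self.level = capacity                   # the drone starts with a full battery

    def drain(self) -> None:
        # Every action costs a little energy; the level never drops below zero.
        self.level = max(0.0, self.level - self.cost_per_action)

    def recharge(self) -> None:
        # Assumption: touching the charging station restores a full charge.
        self.level = self.capacity

    @property
    def is_depleted(self) -> bool:
        # An empty battery is what ends the episode.
        return self.level <= 0.0

    @property
    def normalised(self) -> float:
        # Battery level as a fraction in [0, 1].
        return self.level / self.capacity
```

Exposing `is_depleted` and `normalised` as read-only properties mirrors the idea of letting other scripts read battery state without touching the drain logic.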

Code Breakdown: Battery Mechanic

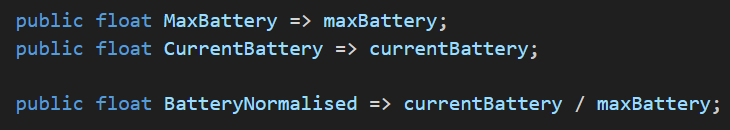

To keep things modular and accessible, I created public properties for battery stats:

This allows the agent scripts to read battery values without exposing internal logic.

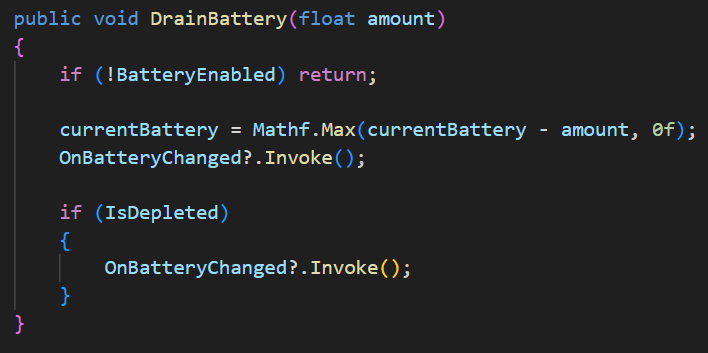

The battery is drained inside the OnActionReceived() method of the agent.

Every action the agent takes now comes at a cost. This simple change added a whole new layer of strategy to the agent's behaviour.
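As a minimal sketch of that per-action cost (again in Python rather than the project's C#), the function below charges a fixed cost and flags the episode as finished once the battery is empty. The constants `ACTION_COST` and `DEPLETION_PENALTY` and the `StepResult` type are hypothetical; the post only says the drain is "small" and the penalty is negative. In ML-Agents, the reward and the termination would typically map onto `AddReward()` and `EndEpisode()`.

```python
from dataclasses import dataclass

ACTION_COST = 0.5         # assumed energy cost per action
DEPLETION_PENALTY = -1.0  # assumed value; the post only says "negative reward"


@dataclass
class StepResult:
    battery: float  # battery level after the action
    reward: float   # reward contributed by the battery rule alone
    done: bool      # True when the episode should end


def apply_action_cost(battery: float) -> StepResult:
    """Charge the per-action cost and end the episode on an empty battery."""
    battery = max(0.0, battery - ACTION_COST)
    if battery <= 0.0:
        return StepResult(battery, DEPLETION_PENALTY, True)
    return StepResult(battery, 0.0, False)
```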

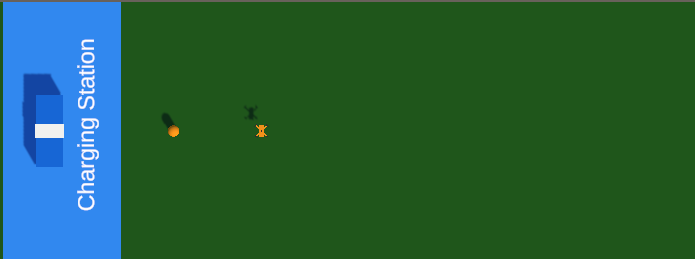

A Larger Environment

In addition to the battery system, I also expanded the training area. The original environment was small and highly predictable.

In this update:

- The area is much larger, encouraging more exploration.

- Charging stations and targets are placed farther apart.

- This forces the drone to think long-term: it must decide whether to pursue the target, recharge or risk depleting its battery mid-flight.

New Observations

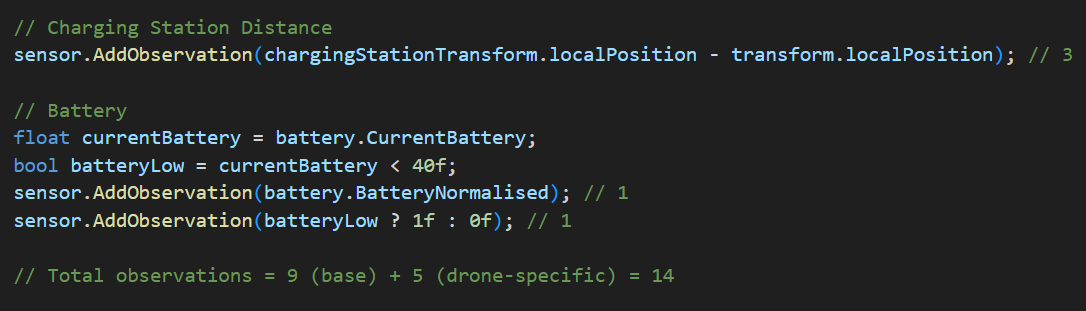

As the environment becomes more complex, so must the agent's awareness of itself and its surroundings. In this phase, I updated the vector observations to reflect the new battery mechanic and environmental features. These observations are crucial for helping the agent make informed decisions, especially now that battery management is part of the task.

What's New in the Observation Space?

In addition to the base observations I set up in the ML-Agents Setup devlog (such as position and velocity), the drone now gathers battery-aware observations.

Added Observations:

Total Observations:

- Base agent observations: 9

  - The agent's local position (3)

  - The agent's velocity (3)

  - The agent's angular velocity (3)

- Battery & charging specific observations: 5

  - Distance to charging station (3)

  - Normalised battery level (1)

  - Battery low indicator (1)

- Total = 14 vector observations
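The breakdown above can be sketched as a single function that assembles the 14 values in order. This is an illustrative Python version, not the agent's actual `CollectObservations()` code. Two things are assumptions here: that the 3-value "distance to charging station" is the offset vector from drone to charger, and that the low-battery flag uses the same 40% threshold as the reward shaping.

```python
from typing import List, Sequence

LOW_BATTERY_THRESHOLD = 0.4  # assumed to match the 40% shaping threshold


def collect_observations(
    position: Sequence[float],
    velocity: Sequence[float],
    angular_velocity: Sequence[float],
    charger_position: Sequence[float],
    battery_level: float,
    battery_capacity: float,
) -> List[float]:
    """Build the 14-value observation vector: 9 base + 5 battery-related."""
    # Assumption: the 3-value charger observation is the drone-to-charger offset.
    to_charger = [c - p for c, p in zip(charger_position, position)]
    normalised = battery_level / battery_capacity
    low_flag = 1.0 if normalised < LOW_BATTERY_THRESHOLD else 0.0
    return [
        *position,           # 3
        *velocity,           # 3
        *angular_velocity,   # 3
        *to_charger,         # 3
        normalised,          # 1
        low_flag,            # 1
    ]
```

Whatever the exact layout, the length of this vector has to match the observation size configured on the agent's Behavior Parameters, so a count like the one above is worth double-checking whenever observations are added.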

Why These Observations Matter

Feeding the agent both quantitative data (exact battery level and distance to the charger) and qualitative flags (whether the battery is low) leaves it better equipped to:

- Decide when to recharge instead of blindly pursuing the target.

- Plan efficient paths that account for its battery level.

- Avoid battery depletion mid-navigation.

Reward Shaping

With new mechanics like the battery system and charging station in place, the next challenge was to guide the agent's learning through rewards. To encourage smart battery management, I implemented a combination of distance-based and collision-based reward shaping techniques.

Distance-Based Reward Shaping

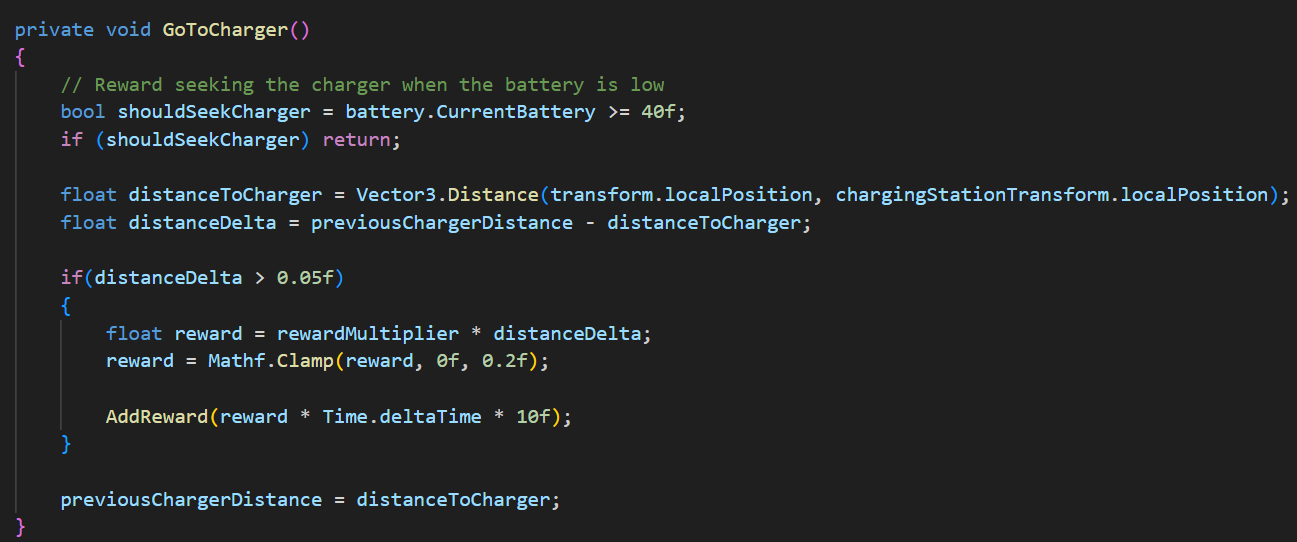

When the drone's battery is low, I want it to move towards the charging station. To help it learn this behaviour, I wrote a function called GoToCharger(), which is called in the OnActionReceived() method. The code below rewards the agent for reducing the distance to the charger.

This subtle, shaped reward is only triggered when the battery drops below 40%. If the drone gets closer to the charger, it earns a small positive reward, reinforcing the idea that closing the gap is a good thing.

- This helps the agent build a habit of recharging before it's too late, without hardcoding exact behaviour.
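A minimal Python sketch of this kind of distance-based shaping is shown below. It is not the `GoToCharger()` code from the screenshot: the function name, the size of the reward and the choice to give nothing when the drone moves away are assumptions. Only the 40% threshold comes from the description above.

```python
import math
from typing import Sequence

LOW_BATTERY_THRESHOLD = 0.4  # from the post: shaping starts below 40%
APPROACH_REWARD = 0.01       # assumed size of the "small positive reward"


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two points."""
    return math.dist(a, b)


def go_to_charger_reward(
    battery_fraction: float, prev_distance: float, curr_distance: float
) -> float:
    """Reward closing the gap to the charger, but only when the battery is low."""
    if battery_fraction >= LOW_BATTERY_THRESHOLD:
        return 0.0  # battery is fine, so no shaping is applied
    if curr_distance < prev_distance:
        return APPROACH_REWARD  # the drone moved closer this step
    return 0.0
```

Comparing against the previous step's distance, rather than rewarding proximity itself, means the agent is paid for progress and cannot farm reward by hovering near the charger.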

Collision Reward Shaping

Charging Station Collision

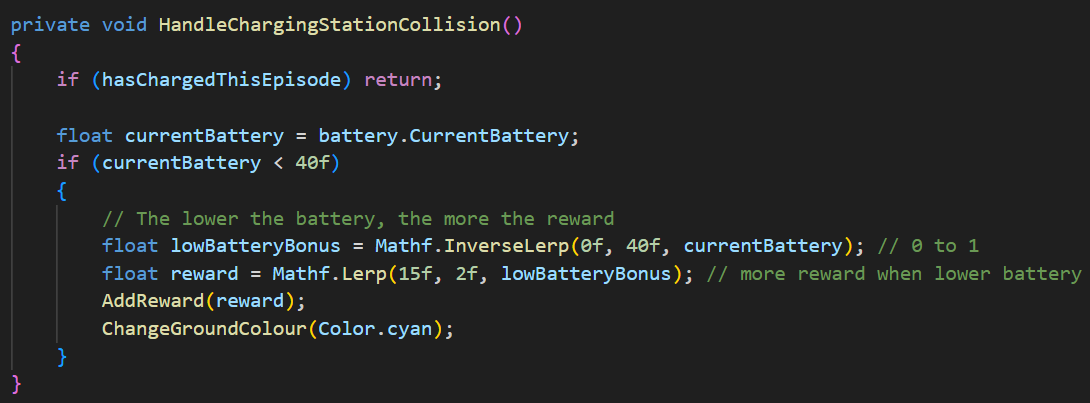

Getting near the charger is great, but reaching it and recharging deserves a bigger reward. That's where the HandleChargingStationCollision() function comes in:

In this setup:

- The lower the battery, the greater the reward for reaching the charger.

- This encourages the drone to delay charging slightly for optimal rewards, but not too long, or it risks running out of battery entirely.
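One simple way to get "lower battery, bigger reward" is to scale the reward by how empty the battery is. The Python sketch below assumes a linear relationship and a hypothetical `MAX_CHARGE_REWARD`; the real HandleChargingStationCollision() may use a different formula.

```python
MAX_CHARGE_REWARD = 1.0  # assumed reward for arriving with an empty battery


def charging_station_reward(battery_fraction: float) -> float:
    """Reward for touching the charger, larger when the battery is lower."""
    # Clamp to [0, 1] so a slightly out-of-range reading cannot flip the sign.
    battery_fraction = min(1.0, max(0.0, battery_fraction))
    # Assumption: the reward grows linearly with the missing charge.
    return MAX_CHARGE_REWARD * (1.0 - battery_fraction)
```

With this shape, topping up a full battery earns nothing, which discourages the drone from camping on the charger.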

Target Collision

In addition to teaching the drone how to manage its energy, I also updated the reward system for successfully reaching the target. The new logic now factors in how efficiently the task was completed.

Efficient Success is Worth More

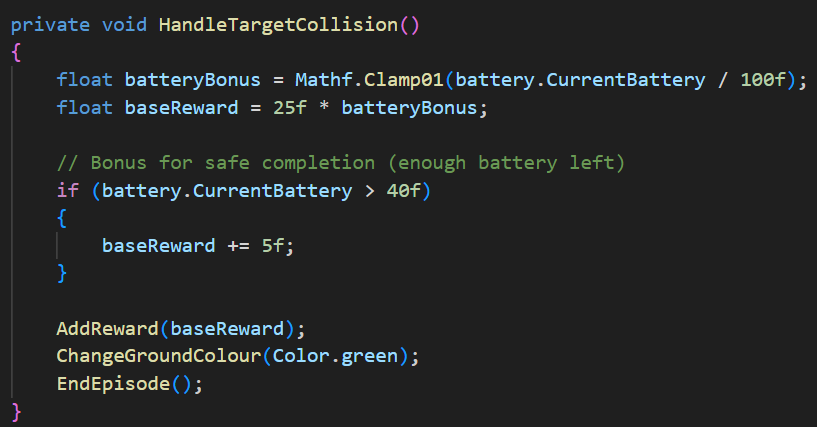

Here's the logic behind the HandleTargetCollision() function:

Let's break it down:

- Battery-aware reward: The more battery the agent has left when it reaches the target, the larger the battery bonus added to its reward.

- Bonus for safe completion: If the agent ends the task with over 40% battery, it gets an extra 5 points.
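The two rules above can be sketched in Python as follows. The 40% threshold and the extra 5 points come from the description; the base reward and the scale of the battery bonus are placeholder values, since the actual numbers live in the screenshot.

```python
BASE_TARGET_REWARD = 10.0    # assumed base reward for reaching the target
BATTERY_BONUS_SCALE = 5.0    # assumed maximum battery bonus
SAFE_THRESHOLD = 0.4         # from the post: "over 40% battery"
SAFE_COMPLETION_BONUS = 5.0  # from the post: "an extra 5 points"


def target_reward(battery_fraction: float) -> float:
    """Reward for reaching the target, scaled by the remaining battery."""
    # Battery-aware part: more charge left means a bigger bonus.
    reward = BASE_TARGET_REWARD + BATTERY_BONUS_SCALE * battery_fraction
    # Safe-completion part: flat bonus when finishing above the threshold.
    if battery_fraction > SAFE_THRESHOLD:
        reward += SAFE_COMPLETION_BONUS
    return reward
```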

Conclusion

The drone agent now has to reach its goal on a limited battery. By expanding the environment, adding a battery mechanic and shaping rewards around battery level and goal completion, I've given the agent what it needs to learn to balance priorities dynamically.

What's Next?

To push this further, here's what I have planned for the next phase:

- Custom PPO Config: I'll be creating a tailored Proximal Policy Optimisation (PPO) training configuration to better match the complexity of the new environment. This includes:

  - Increased training steps.

  - Adjusted learning rate.

  - Tweaked batch size and buffer settings.

  - Curiosity settings for more exploration.

UAV Search & Rescue Simulation

Training Search & Rescue Drones with Reinforcement Learning.

| Field | Value |
| --- | --- |
| Status | In development |
| Author | Shivani |
| Genre | Simulation |

